[WORK NODE & MASTER NODE]

=============================================================================================

Firewall off

On a development server, you can simply disable the firewall (and SELinux) as shown below.

# sudo systemctl stop firewalld && sudo systemctl disable firewalld

# sudo setenforce 0

# sudo sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

=============================================================================================

On a production server, open only the required ports instead, as described in the firewall section further down.

Swap off

# sudo swapoff -a

# sudo sed -i -e '/swap/d' /etc/fstab
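The sed command above deletes every line in /etc/fstab that contains the string "swap". As a more conservative sketch, assuming you would rather comment the entries out than delete them, something like the following could be used. The function name disable_swap_entries and its file argument are illustrative and not part of the original procedure; sed -i.bak also leaves a backup copy next to the file.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Usage on a real system (needs root): disable_swap_entries /etc/fstab
disable_swap_entries() {
    fstab_file="$1"
    # Only touch non-comment lines that have "swap" as a whitespace-delimited field.
    sed -i.bak -e '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab_file"
}
```

Trying it on a copy of the file first makes it easy to confirm that only the swap line changes.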

=============================================================================================

Edit the hosts file (optional)

# vi /etc/hosts

---------------------------

172.16.0.101 node-01

172.16.0.102 node-02

172.16.0.103 node-03

172.16.0.104 node-04

172.16.0.105 node-05

---------------------------
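Since the node names and addresses above follow a simple numbering pattern, the entries can also be generated instead of typed by hand. This is a minimal sketch; the function name gen_node_hosts and its three arguments (network prefix, first host octet, node count) are assumptions made for illustration.

```shell
# Hypothetical helper: print /etc/hosts lines for consecutively numbered nodes.
# Arguments: network prefix, first host octet, node count.
gen_node_hosts() {
    prefix="$1"; first="$2"; count="$3"
    i=0
    while [ "$i" -lt "$count" ]; do
        printf '%s.%d node-%02d\n' "$prefix" $((first + i)) $((i + 1))
        i=$((i + 1))
    done
}

# Preview the five entries used in this post (prints only, changes nothing).
gen_node_hosts 172.16.0 101 5
```

After checking the preview, the output could be appended with: gen_node_hosts 172.16.0 101 5 | sudo tee -a /etc/hosts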

=============================================================================================

Install Docker

On Rocky Linux, the usual CentOS installation method does not work, so Docker has to be installed as follows.

# sudo dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

# sudo dnf update

# Replace conflicting packages (e.g., podman).

# sudo dnf install -y docker-ce docker-ce-cli containerd.io --allowerasing

# docker --version

# sudo systemctl enable docker

# sudo systemctl start docker

# sudo systemctl status docker

=============================================================================================

# sudo mkdir -p /etc/docker

# cat <<EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

# sudo systemctl daemon-reload

# sudo systemctl restart docker
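The important setting in daemon.json is the systemd cgroup driver, which should match what the kubelet uses. A small check like the one below can confirm the file contains it before restarting Docker. The function name uses_systemd_cgroup is hypothetical, and the check is a plain text match, not a JSON parser.

```shell
# Hypothetical helper: succeed if the given daemon.json selects the systemd cgroup driver.
# Usage: uses_systemd_cgroup /etc/docker/daemon.json && echo "systemd cgroup driver configured"
uses_systemd_cgroup() {
    grep -q '"native.cgroupdriver=systemd"' "$1"
}
```

On a running host, docker info --format '{{.CgroupDriver}}' reports the driver that is actually in effect.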

=============================================================================================

Install containerd

# cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

# sudo modprobe overlay

# sudo modprobe br_netfilter

# cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# sudo sysctl --system
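The three kernel parameters above must all be set to 1 for pod networking to work. As a rough sketch, the file can be checked before relying on it; check_k8s_sysctl is an illustrative name, and the function only reads the file passed to it.

```shell
# Hypothetical helper: verify that a sysctl.d file sets every required key to 1.
# Usage: check_k8s_sysctl /etc/sysctl.d/99-kubernetes-cri.conf
check_k8s_sysctl() {
    conf_file="$1"
    for key in net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward net.bridge.bridge-nf-call-ip6tables; do
        # Allow optional whitespace around "=".
        if ! grep -Eq "^${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf_file"; then
            echo "missing or not 1: $key"
            return 1
        fi
    done
    echo "ok"
}
```

The live values can be read back afterwards with sysctl net.ipv4.ip_forward and the two bridge keys.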

=============================================================================================

# cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

####### 2024-02-21 ############# As of this date, the installation only succeeded with the repository definition below.

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

##########################################################

####### Pinning a version #############################################

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.27/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.27/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

sudo yum install -y kubelet-1.27.3 kubeadm-1.27.3 kubectl-1.27.3 --disableexcludes=kubernetes

#############################################################
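The v1.29 and v1.27 repository files above differ only in the minor version that appears in baseurl and gpgkey. A small generator, sketched below, makes that explicit. The function name k8s_repo is an assumption for illustration; the URL pattern is copied from the repo definitions in this post.

```shell
# Hypothetical helper: print a kubernetes.repo definition for one minor release.
# Argument: minor version such as v1.27 or v1.29.
k8s_repo() {
    minor="$1"
    cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}
```

It could be used as: k8s_repo v1.27 | sudo tee /etc/yum.repos.d/kubernetes.repo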

# sudo dnf -y install kubelet kubeadm kubectl --disableexcludes=kubernetes epel-release

# sudo systemctl enable --now kubelet

# sudo mkdir -p /etc/containerd

# containerd config default | sudo tee /etc/containerd/config.toml

=============================================================================================

# sudo vi /etc/containerd/config.toml

---------------------------

......

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

SystemdCgroup = true

......

---------------------------

# sudo systemctl enable containerd

# sudo systemctl restart containerd
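Instead of editing config.toml in vi, the SystemdCgroup value can be flipped with sed. This sketch assumes the generated default config already contains a "SystemdCgroup = false" line, which may not hold for every containerd version; enable_systemd_cgroup is a hypothetical name.

```shell
# Hypothetical helper: switch SystemdCgroup from false to true in a containerd config file.
# Usage on a real system (needs root): enable_systemd_cgroup /etc/containerd/config.toml
enable_systemd_cgroup() {
    # Keep the original indentation and spacing; a .bak copy is left next to the file.
    sed -i.bak -e 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$1"
}
```

grep SystemdCgroup on the file afterwards shows whether the change took effect.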

=============================================================================================

Kubernetes 1.24 and later no longer include dockershim.

Install cri-dockerd instead # cri-dockerd install

# VER=$(curl -s https://api.github.com/repos/Mirantis/cri-dockerd/releases/latest|grep tag_name | cut -d '"' -f 4|sed 's/v//g')

# echo $VER

# wget https://github.com/Mirantis/cri-dockerd/releases/download/v${VER}/cri-dockerd-${VER}.amd64.tgz

# tar xvf cri-dockerd-${VER}.amd64.tgz

# sudo mv cri-dockerd/cri-dockerd /usr/local/bin/
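The download URL above is built from the release tag with the leading "v" removed. To pin a specific release rather than always taking the latest, the URL construction can be factored out as in the sketch below. The function name cri_dockerd_url is illustrative, and the tag used in the usage line is only a placeholder, not a recommendation.

```shell
# Hypothetical helper: build the release tarball URL from a tag such as "v0.3.4".
cri_dockerd_url() {
    ver="${1#v}"    # strip a leading "v" if present
    echo "https://github.com/Mirantis/cri-dockerd/releases/download/v${ver}/cri-dockerd-${ver}.amd64.tgz"
}
```

Usage would look like: wget "$(cri_dockerd_url v0.3.4)"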

cri-docker Version Check

# cri-dockerd --version

# wget https://raw.githubusercontent.com/Mirantis/cri-dockerd/master/packaging/systemd/cri-docker.service

# wget https://raw.githubusercontent.com/Mirantis/cri-dockerd/master/packaging/systemd/cri-docker.socket

# sudo mv cri-docker.socket cri-docker.service /etc/systemd/system/

# cd /etc/systemd/system/

# sudo sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service

# sudo systemctl daemon-reload

# sudo systemctl enable cri-docker.service

# sudo systemctl enable --now cri-docker.socket

# vi /var/lib/kubelet/kubeadm-flags.env

KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.9"

cri-docker Active Check

# sudo systemctl restart docker && sudo systemctl restart cri-docker

# sudo systemctl status cri-docker.socket --no-pager

=============================================================================================

# sudo vi /etc/crictl.yaml

runtime-endpoint: unix:///var/run/cri-dockerd.sock

image-endpoint: unix:///var/run/cri-dockerd.sock

timeout: 2

debug: false

pull-image-on-create: true

# systemctl restart containerd

=============================================================================================

Install Kubernetes

Open the firewall

On a production server, open the firewall on the master and worker nodes as follows.

[MASTER NODE]

# sudo firewall-cmd --add-port={80,443,6443,2379,2380,10250,10251,10252,30000-32767}/tcp --permanent

# sudo firewall-cmd --reload

=============================================================================================

[WORK NODE]

# sudo firewall-cmd --add-port={80,443,10250,30000-32767}/tcp --permanent

# sudo firewall-cmd --reload
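The {80,443,...}/tcp form in the firewall-cmd lines relies on bash brace expansion to produce one --add-port option per port. A dry-run sketch that spells the ports out per role is shown below. The function name firewall_cmds is an assumption, and it only prints the commands so they can be reviewed before being run with sudo.

```shell
# Hypothetical helper: print (not run) the firewall-cmd lines for a node role.
# Argument: "master" or "worker". Port lists are copied from the commands above.
firewall_cmds() {
    case "$1" in
        master) ports="80 443 6443 2379 2380 10250 10251 10252 30000-32767" ;;
        worker) ports="80 443 10250 30000-32767" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
    for p in $ports; do
        echo "firewall-cmd --permanent --add-port=${p}/tcp"
    done
    echo "firewall-cmd --reload"
}
```

After applying the commands, sudo firewall-cmd --list-ports shows what is actually open.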

=============================================================================================

Install haproxy (optional)

You can install haproxy on server 1 and have it distribute requests arriving on port 16443 across servers 1, 2, and 3.

# sudo dnf -y install haproxy

# sudo vi /etc/haproxy/haproxy.cfg

frontend kubernetes-master-lb

bind 0.0.0.0:16443

option tcplog

mode tcp

default_backend kubernetes-master-nodes

backend kubernetes-master-nodes

mode tcp

balance roundrobin

option tcp-check

option tcplog

server node1 node-01:6443 check

server node2 node-02:6443 check

server node3 node-03:6443 check

# sudo firewall-cmd --add-port={80,443,6443,2379,2380,10250,10251,10252,16443,30000-32767}/tcp --permanent

# sudo firewall-cmd --reload

# sudo systemctl enable haproxy

# sudo systemctl restart haproxy
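The backend section lists one server line per control-plane node, all on port 6443. If the set of masters changes, those lines can be regenerated with a sketch like the following; haproxy_servers is a hypothetical helper name.

```shell
# Hypothetical helper: print haproxy "server" lines for a list of control-plane hosts.
# Arguments: one hostname per control-plane node.
haproxy_servers() {
    n=1
    for host in "$@"; do
        printf '    server node%d %s:6443 check\n' "$n" "$host"
        n=$((n + 1))
    done
}

# Preview the three backend lines used in this post.
haproxy_servers node-01 node-02 node-03
```

Before restarting the service, haproxy -c -f /etc/haproxy/haproxy.cfg checks the configuration file for syntax errors.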

=============================================================================================

[MASTER NODE]

Install using cri-dockerd.sock

###############################

kubeadm init --pod-network-cidr=192.168.0.0/16 \

--cri-socket unix:///var/run/cri-dockerd.sock

###############################

# sudo kubeadm init \

--control-plane-endpoint "node-01:16443" \

--pod-network-cidr=192.168.0.0/16 \

--upload-certs

In the end, this is the one I used.

# kubeadm init --pod-network-cidr=172.16.0.0/16

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join node-01:16443 --token qelzz0.dq8fp6bmq3t8ns31 \

--discovery-token-ca-cert-hash sha256:160c9543e023b599d6cb624e15dXXXXXXXXXXXXXXXXXXX \

--control-plane --certificate-key ec722f6286381b4b91f2f8022854XXXXXXXXXXXXX

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join node-01:16443 --token qelzz0.dq8fpXXXXXXXXXXX \

--discovery-token-ca-cert-hash sha256:160c9543e023b599d6cb624XXXXXXXXXXXX

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config

=============================================================================================

Additional master nodes can be joined with the command below.

# sudo kubeadm join node-01:16443 --token qelzz0.dq8fp6bmq3t8ns31 \

--discovery-token-ca-cert-hash sha256:160c9543e023b599d6cb624e15dXXXXXXXXXXXXXXXXXXX \

--control-plane --certificate-key ec722f6286381b4b91f2f8022854XXXXXXXXXXXXX

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl get nodes

# If the node status shown by kubectl get nodes stays pending (not Ready), run the command below.

# sudo systemctl restart containerd

To schedule Pods on the master nodes as well, run the command below.

# kubectl taint nodes --all node-role.kubernetes.io/control-plane-

=============================================================================================

Apply kube-flannel.yml

# kubectl create -f kube-flannel.yml

# kubectl get nodes

- Check that the master node is Ready

# kubectl get pod --all-namespaces

- Check that the pods are Running

- If CoreDNS is stuck in Pending, reboot the master node

- Once CoreDNS is Running after the reboot, join the worker nodes

=============================================================================================

[WORK NODE]

Worker nodes can be joined with the command below.

# sudo kubeadm join node-01:16443 --token qelzz0.dq8fpXXXXXXXXXXX \

--discovery-token-ca-cert-hash sha256:160c9543e023b599d6cb624XXXXXXXXXXXX

################## Specifying the CRI socket on a worker node #############################

kubeadm join 10.20.100.40:6443 --token z7v4y0.c41zdeeroehfatwl --discovery-token-ca-cert-hash sha256:3c0ad3e851e14a56b8ec86dc958a7203c76aa57986ee3da1c33b21c26375f4ec --cri-socket unix:///var/run/cri-dockerd.sock

##########################################################
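The two worker join variants above differ only in whether --cri-socket is appended, which is needed when both containerd and cri-dockerd are present on the node. A small builder that prints the command makes the difference explicit. The function name build_join_cmd and its argument order are assumptions for illustration, and the values in the tests and usage line are placeholders.

```shell
# Hypothetical helper: assemble (and only print) a kubeadm join command.
# Arguments: endpoint, token, CA cert hash, optional CRI socket.
build_join_cmd() {
    cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
    if [ -n "${4:-}" ]; then
        cmd="$cmd --cri-socket $4"
    fi
    echo "$cmd"
}
```

For example, build_join_cmd node-01:16443 TOKEN sha256:HASH unix:///var/run/cri-dockerd.sock prints the full command, which can then be reviewed and run with sudo.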

# If the node status shown by kubectl get nodes stays pending (not Ready), run the command below.

# sudo systemctl restart containerd

=============================================================================================

=============================================================================================

[MASTER NODE ADD ]

Existing master node // generate a join token

[root@bookclubDEV01 ~]# sudo kubeadm token create --print-join-command

kubeadm join 10.20.100.40:6443 --token cvoqet.h0nxmm1u2d6rcj7w --discovery-token-ca-cert-hash sha256:3c0ad3e851e14a56b8ec86dc958a7203c76aa57986ee3da1c33b21c26375f4ec

Existing master node // generate the certificate key

[root@bookclubDEV01 ~]# sudo kubeadm init phase upload-certs --upload-certs

I0731 14:42:57.630461 2880898 version.go:256] remote version is much newer: v1.30.3; falling back to: stable-1.27

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

c9c98fd5c8a98e757db7169414ff5a4777594ff0882418757aac5d0e5b9fcb15

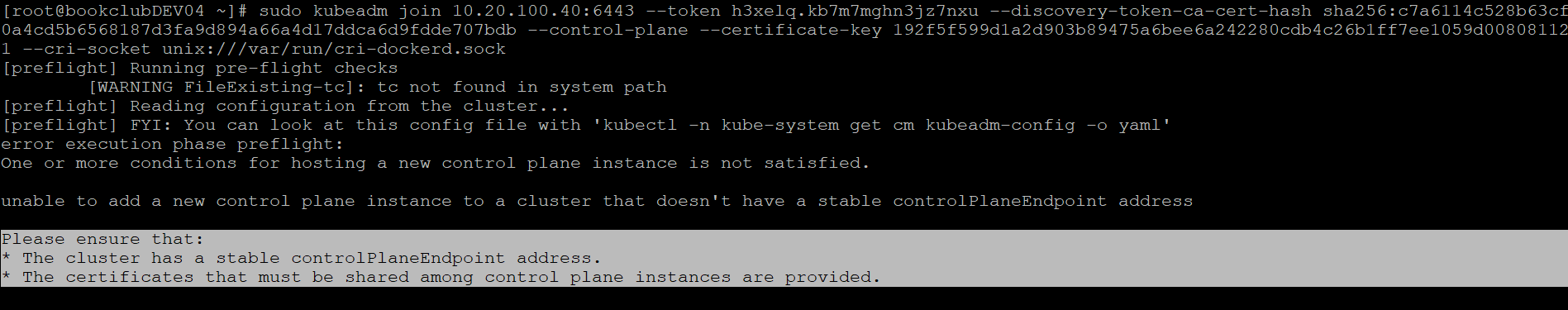

[NEW MASTER NODE ]

New master node // specify the token, certificate key, and CRI socket

[root@bookclubDEV04 ~]# sudo kubeadm join 10.20.100.40:6443 --token cvoqet.h0nxmm1u2d6rcj7w --discovery-token-ca-cert-hash sha256:3c0ad3e851e14a56b8ec86dc958a7203c76aa57986ee3da1c33b21c26375f4ec --control-plane --certificate-key c9c98fd5c8a98e757db7169414ff5a4777594ff0882418757aac5d0e5b9fcb15 --cri-socket unix:///var/run/cri-dockerd.sock

sudo kubeadm join 10.20.100.40:6443 --token y6s42d.jvqxxb8eiyieg4yn --discovery-token-ca-cert-hash sha256:4d75dcfeefe8c48e2a2a4cc43e32ee8377dbce973fec64fd7a345520d49ea77f --control-plane --certificate-key 7cec3d24f5b4d8485181c06c4f3c341cfcf2e79594286d78b40fb01be9643ea8 --cri-socket unix:///var/run/cri-dockerd.sock

######################################24.08.13########################################

Check the cluster leader

# curl --cacert /etc/kubernetes/pki/etcd/ca.crt \

--cert /etc/kubernetes/pki/etcd/server.crt \

--key /etc/kubernetes/pki/etcd/server.key \

https://10.20.100.40:2379/v3/maintenance/status

Check the status of the cluster components

# kubectl get componentstatuses

[If a new master node fails to join, edit the config on the existing master to specify the control-plane endpoint]

# kubectl edit configmaps -n kube-system kubeadm-config

============================================================================================

Reissue a token

When a token has expired, a new one can be issued with the commands below.

# kubeadm token list

# kubeadm token delete TOKEN_NAME

# kubeadm token create --print-join-command
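kubeadm bootstrap tokens have a fixed shape: six lowercase alphanumeric characters, a dot, then sixteen more. A quick format check, sketched below, can catch a truncated or mistyped token before a join is attempted. The function name is_bootstrap_token is hypothetical, and the check says nothing about whether the token is still valid on the cluster.

```shell
# Hypothetical helper: succeed if the argument looks like a kubeadm bootstrap token.
# Format: [a-z0-9]{6}.[a-z0-9]{16}
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

Expiry itself still has to be checked on a master node with kubeadm token list.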

=============================================================================================

Reinstall

# sudo systemctl stop kubelet

# sudo kubeadm reset   (when using cri-dockerd: sudo kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock)

# sudo systemctl start kubelet

# sudo rm -rf /etc/cni/net.d

# sudo rm -rf $HOME/.kube/

=============================================================================================

########## NODE NOTREADY ##########

# curl https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml -O

# vi kube-flannel.yml ( change the IP range )

# systemctl restart kubelet

# kubectl get nodes

kubectl edit cm coredns -n kube-system

=============================================================================================

Install Calico

# kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/tigera-operator.yaml

# vi calico-resources.yaml

# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 192.168.0.0/24
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://projectcalico.docs.tigera.io/master/reference/installation/api#operator.tigera.io/v1.APIServer
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}

# kubectl create -f calico-resources.yaml
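The Calico pool here is 192.168.0.0/24, while kubeadm init earlier in this post was given --pod-network-cidr=192.168.0.0/16. It is generally expected that the Calico pool sits inside the pod network CIDR, and that can be sanity-checked with a little integer arithmetic. This is a sketch only; ip_to_int and cidr_within are illustrative names and the code handles IPv4 only.

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() {
    set -- $(printf '%s' "$1" | tr '.' ' ')
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
    inner_ip="${1%/*}"; inner_len="${1#*/}"
    outer_ip="${2%/*}"; outer_len="${2#*/}"
    # The inner prefix must be at least as long as the outer one.
    [ "$inner_len" -ge "$outer_len" ] || return 1
    mask=$(( (4294967295 << (32 - outer_len)) & 4294967295 ))
    # Both network addresses must agree under the outer mask.
    [ $(( $(ip_to_int "$inner_ip") & mask )) -eq $(( $(ip_to_int "$outer_ip") & mask )) ]
}

cidr_within 192.168.0.0/24 192.168.0.0/16 && echo "Calico pool is inside the pod CIDR"
```

The same check fails for the alternative kubeadm init shown earlier with 172.16.0.0/16, so the Calico cidr would need to be adjusted to whichever pod CIDR was actually used.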

=============================================================================================

Set up kubectl autocompletion

Enable Tab completion for kubectl commands

# sudo yum install bash-completion -y ## Red Hat family

# apt-get install bash-completion -y ## Ubuntu

# source /usr/share/bash-completion/bash_completion

# kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null

# echo 'alias k=kubectl' >>~/.bashrc

# echo 'complete -o default -F __start_kubectl k' >>~/.bashrc

# source /usr/share/bash-completion/bash_completion

# Reconnect the terminal session
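Running the echo ... >> ~/.bashrc lines more than once leaves duplicate entries in the file. One way to keep them idempotent is a small append-if-missing helper, sketched here; append_once is a hypothetical name and the function only matches whole lines exactly.

```shell
# Hypothetical helper: append a line to a file only if it is not already present.
# Arguments: the exact line, the target file.
append_once() {
    line="$1"; file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

It could replace the two echo commands with append_once 'alias k=kubectl' ~/.bashrc and append_once 'complete -o default -F __start_kubectl k' ~/.bashrc.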
